Introduction

Golf performance depends on a player’s ability to achieve physiological and psychological states appropriate for the execution of complex technical skills (Robertson et al., 2013; M. F. Smith, 2010). However, players encounter numerous challenges before and during competition that may disrupt these adaptive states. These include extensive travel demands (e.g., jet lag, organisational stressors) (Reilly et al., 2007); unfamiliar cultural and climatic conditions (e.g., nutritional issues, physiological disturbances) (Heaney et al., 2008; M. F. Smith et al., 2012); and high volumes of practice and competition (e.g., accumulated fatigue, injury risk) (Fradkin et al., 2007). Because the swing and the other fine-motor skills performed during play (e.g., putting) are complex, these factors can substantially affect scoring outcomes. Recently, there has been increased interest in the behaviours players use to prepare for and manage such factors, both in the period preceding a tournament and before and after each round (Davies et al., 2017; Pilgrim et al., 2018b, 2018a). Collectively defined as “tournament preparation”, these behaviours represent a relatively unexplored area of the golf science literature (Pilgrim et al., 2018a).

The Delphi technique is a consensus-based approach for developing guidelines or protocols in areas where established knowledge is lacking (Mokkink et al., 2010). This approach uses a panel of experts who respond anonymously to a series of questionnaires (i.e., rounds), with aggregate feedback from each round used to facilitate consensus (Hasson et al., 2000). In sport, the Delphi technique has been used successfully to develop hierarchies of features for talent identification in soccer (Larkin & O’Connor, 2017) and for officiating in rugby (Morris & O’Connor, 2017). One recent study used a two-round Delphi to develop the tournament preparation framework (TPF), a list of items (i.e., behaviours) important for preparation in golf (Pilgrim et al., 2018a). The TPF is the focus of the current study and consists of 46 items whose importance is indicated relative to five participation levels of the Golf Australia athlete pathway. The Golf Australia pathway is based on the Foundation, Talent, Elite and Mastery (FTEM) model (Gulbin et al., 2013), which comprises four macro phases and 10 micro phases: Foundation (F1-F3), Talent (T1-T4), Elite (E1-E2), and Mastery (M1). The TPF covers levels T3 to M1 (see Figure 1).

The TPF includes both task-specific and self-regulatory items. Self-regulatory items include goal setting and planning, gathering task-relevant information, seeking social assistance, and self-reflection. Task-specific items include completing practice rounds, self-correcting the swing, and implementing physical and mental preparation routines (Pilgrim et al., 2018a). The TPF provides detailed information with which national sport organisations can develop education programmes and curricula. Further, it presents easily accessible content that coaches and players can use to develop individual preparation routines. Collecting systematic tournament data from the player’s perspective is also crucial for promoting self-regulatory behaviour and gradual performance progression. Web-based software programmes that enable players to self-report statistical data on smartphones have become popular in recent years; however, no self-report version of the TPF is currently available. Such an instrument could allow players to capture data on preparatory behaviours and cognitions and to integrate them with other performance data (e.g., strokes, statistical indicators).

Research in disciplines such as medicine (Compton et al., 2008), clinical psychology (Rytwinski et al., 2009), and quality of life research (Jensen et al., 2005) has led to the development of self-report versions of frameworks and psychometric scales. For an instrument to be truly usable by players, coaches, and researchers, and to be taken up in the field, it should display suitable measurement properties (i.e., reliability, validity, and responsiveness) (Robertson et al., 2013; Terwee et al., 2010). Such information gives users confidence in the instrument’s quality and in the conclusions drawn from its application (Robertson et al., 2013). A commonly evaluated measurement property is validity, that is, the degree to which an instrument truly measures the construct it purports to measure (Terwee et al., 2010). Several types of validity are relevant to evaluating instrument quality, such as criterion and construct validity (Robertson et al., 2013, 2017; Terwee et al., 2010). Criterion validity provides strong evidence of instrument validity and is assessed by comparing an instrument with a gold standard (Terwee et al., 2010). If no gold standard exists (as with tournament preparation), construct validity can be assessed instead. Construct validity refers to whether an instrument adequately represents a given construct and comprises two sub-types: convergent and discriminant validity (Robertson et al., 2013; Scholtes et al., 2011). Assessing convergent and discriminant validity is a crucial part of the instrument validation process. Convergent validity can be defined as the degree to which different measures of a construct that should be related are, in fact, related (Robertson et al., 2017). As a first step towards establishing a high-quality self-report instrument for capturing preparatory behaviours, this study focuses on the development and convergent validity of the TPF-Self-Report (TPF-SR) instrument.

Self-report measures are inherently vulnerable to measurement error arising from unconscious (e.g., recall error) and conscious bias (Ekegren et al., 2014). Conscious bias may reflect efforts to respond in a socially desirable fashion by over- or under-reporting specific responses, essentially “faking good.” Studies in public health and policy have compared self-report responses with those obtained from other measures (e.g., direct observation) to evaluate their validity (Northcote & Livingston, 2011; Slootmaker et al., 2009). In golf, self-report measures have been used to collect information on players’ practice volumes over a period of weeks, and these data have been compared with retrospective accounts from interviews to determine the measures’ validity (Hayman et al., 2012). Assessing the level of agreement between two measures of the same construct can indicate an instrument’s convergent validity: if agreement between the measures is high, measurement error is low, which supports validity (Scholtes et al., 2011). One challenge in evaluating the measurement properties of the TPF-SR is that the original framework included items that are both overt (i.e., directly observable) and covert (i.e., not directly observable). In such cases, multimethod approaches, which combine two or more quantitative or qualitative methods for complementary purposes, can be used (Hesse-Biber & Johnson, 2015). This study describes the development and preliminary validation of the TPF-SR instrument as a first step towards establishing a high-quality self-report version of the TPF. The specific aims are (1) to develop a self-report version of the TPF (i.e., the TPF-SR) and (2) to evaluate its convergent validity using a multimethod approach.

Method

This study comprised two phases of data collection. In Phase One, items from the TPF-SR instrument were used to develop (a) observational coding checklists for directly examining overt items and (b) structured interview checklists for covert items. Researchers then used the coding and interview checklists to evaluate players’ tournament preparation behaviours during an international tournament. In Phase Two, players self-administered the TPF-SR instrument to record their tournament preparation behaviours for tournaments played between July and October 2017.

Recruitment and Participants

Inclusion criteria required that players (a) held a World Amateur Golf Ranking, (b) were current members of Australian state high-performance programmes, and (c) were 16 years of age or older. Participants were recruited through the authors’ industry contacts and professional networks. Participants in Phase One (i.e., observation/interviews) were 12 elite amateur golfers. Participants in Phase Two (i.e., self-report) were 18 elite amateur golfers, 12 of whom had participated in Phase One.

Instruments

TPF-Self-Report (TPF-SR)

The TPF-SR was developed by the authors of the TPF (Pilgrim et al., 2018a). The wording of each item was altered slightly from the original framework to allow a binary “yes/no” response format. For example, the item “structuring technical/shot practice relevant to the playing conditions of the tournament course” was changed to “did you structure your technical/shot practice relevant to the requirements of the tournament course?” In some cases, items were split into “a” and “b” parts so that players could record a response for each item component. For example, the item “mapping the course to identify the important features/details and using this information to develop a strategy for the course” was split into “did you map the course” and “did you develop a strategy or game plan for the course?” A “not applicable” (NA) option was also included for items that were contextual or irrelevant in certain competitive situations. For example, the item “did you use any strategies for in-flight nutrition or hydration?” was specific to long-haul travel; therefore, if the tournament was local, “NA” was selected. The final TPF-SR instrument comprised 51 items across three sub-sections (see Table 1): (1) before the tournament; (2) during the tournament; and (3) after the tournament. Item 42a (“have you played the tournament course”) served as a filter question for Item 42b (“If yes, did you spend less time on course mapping and more on shot practice or playing the course than if you’d never played it before?”) and did not represent an item from the TPF; it was therefore included in the checklist but not in the analysis. The web-based software Trello (2018; Atlassian, Sydney, NSW, AUS) was used to administer the checklist.

Observational coding checklist

The checklist used for observational coding was adapted to include the items from the TPF-SR that reflected overt, directly observable behaviours (3/51).

Structured interview checklist

Items from the TPF-SR reflecting behaviours that were covert and not directly observable (48/51) were used to develop a binary “yes/no” structured interview checklist.

Procedure

Instrument pilot testing

Before data collection, the TPF-SR was piloted with three amateur golfers competing in a local club tournament. Feedback from pilot testing was used to adjust the instrument; for example, the wording of several items was shortened to enhance readability.

Observer training and reliability

Observations were completed by three trained observers, who attended a two-hour training session led by the first author to familiarise them with the checklist instrument and to establish agreement on how items should be coded. After this initial training, the three observers and the first author concurrently coded eight players during practice rounds at the Riversdale Golf Club. Cohen’s kappa (κ) was used to quantify the level of inter-observer agreement on players’ behaviours. Kappa was computed for all coder pairs (range = 0.5–0.9; mean across all pairs of coders = 0.7), indicating good agreement between observers (McHugh, 2012).
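The kappa calculation can be sketched in Python for pairs of coders who have each coded the same observations as yes (1) or no (0). This is a minimal illustration of the standard two-rater Cohen’s κ, not the software the authors used (which the study does not specify); the function names and example codes are hypothetical.

```python
from itertools import combinations


def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa for two coders' binary codes (1 = yes, 0 = no)."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must code the same non-empty set of observations")
    n = len(coder_a)
    # Observed agreement: proportion of observations coded identically.
    p_o = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Chance agreement, from each coder's marginal proportion of "yes" codes.
    yes_a = sum(coder_a) / n
    yes_b = sum(coder_b) / n
    p_e = yes_a * yes_b + (1 - yes_a) * (1 - yes_b)
    if p_e == 1:
        # Both coders gave the same constant code throughout; kappa is undefined.
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (p_o - p_e) / (1 - p_e)


def pairwise_kappas(codes_by_coder):
    """Kappa for every pair of coders, keyed by (coder, coder)."""
    return {
        (c1, c2): cohens_kappa(codes_by_coder[c1], codes_by_coder[c2])
        for c1, c2 in combinations(sorted(codes_by_coder), 2)
    }


# Hypothetical codes for one checklist item across eight observed players.
codes = {
    "observer_1": [1, 1, 0, 1, 0, 1, 1, 0],
    "observer_2": [1, 1, 0, 0, 0, 1, 1, 1],
    "observer_3": [1, 1, 0, 1, 0, 1, 1, 1],
}
kappas = pairwise_kappas(codes)
# A single summary value, analogous to a mean across all coder pairs.
mean_kappa = sum(kappas.values()) / len(kappas)
print(round(cohens_kappa(codes["observer_1"], codes["observer_2"]), 3))  # → 0.467
```

Kappa corrects raw percentage agreement for the agreement expected by chance from each coder’s marginal rates, which matters for checklist items that nearly everyone performs (or nobody does).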

Phase One: Observational coding and structured interviews

A multimethod approach was used to assess players’ tournament preparation behaviours during an international tournament (March 8-12, 2017) at the Riversdale Golf Club, Mount Waverley, Victoria. First, the three trained observers and the first author were assigned to separate areas of the course, including the practice range, putting green and clubhouse. Observers recorded data on-site, using the observational coding checklist to indicate item endorsement, that is, whether the item was performed. Second, structured face-to-face interviews were used to elicit information from players (i.e., whether they endorsed each item as performed). Interviews were conducted in a quiet area of the clubhouse within 20 minutes of the end of a round and lasted an average of 20 minutes.

Phase Two: Self-report

Verbal and written information explaining the purpose of the research was delivered to players during scheduled education sessions conducted by the first author at a Golf Australia training camp (July 2017). Targeted players who did not attend were sent the research information by email. A total of 22 players agreed to participate in the study, of whom four did not complete any of the checklists, resulting in a final sample of 18 players. Players were asked to create a Trello user account, which could be accessed through either the web or the mobile application. Each player was allocated several “boards”, data entry spaces in which players indicated “y/n” for items from the TPF-SR instrument. The checklist remained open on Trello from July to October 2017, after which participants’ responses were exported for analysis. The relevant Human Research Ethics Committee provided ethical clearance for the study.

Data analysis

Phase One

Descriptive analyses of the checklists used for observational coding and structured interviews were undertaken by aggregating the total number of item endorsements (“yes” responses) after “NA” responses were excluded. These data were then used to calculate the mean endorsement rate (i.e., percentage of “yes” responses) for each of the instrument items.
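The endorsement-rate calculation can be sketched as follows. This is a minimal illustration under stated assumptions: each completed checklist is one response set, answers are recorded as “yes”, “no”, or “NA”, and rates are pooled across all response sets for an item (the study does not say whether rates were first averaged within players). The function name and example data are hypothetical.

```python
from collections import defaultdict

VALID_ANSWERS = {"yes", "no", "NA"}


def endorsement_rates(responses):
    """Percentage of "yes" answers per item after excluding "NA" answers.

    `responses` maps a response-set ID (e.g. one completed checklist) to a
    dict of {item ID: "yes" | "no" | "NA"}. Items answered only "NA" get None.
    """
    yes_counts = defaultdict(int)
    valid_counts = defaultdict(int)
    items = set()
    for answers in responses.values():
        for item, answer in answers.items():
            if answer not in VALID_ANSWERS:
                raise ValueError(f"unexpected answer {answer!r} for item {item}")
            items.add(item)
            if answer == "NA":
                continue  # "NA" answers are excluded before aggregation
            valid_counts[item] += 1
            if answer == "yes":
                yes_counts[item] += 1
    return {
        item: (100 * yes_counts[item] / valid_counts[item] if valid_counts[item] else None)
        for item in sorted(items)
    }


# Hypothetical responses from three completed checklists.
example = {
    "checklist_1": {"6a": "NA", "19": "yes", "41": "no"},
    "checklist_2": {"6a": "yes", "19": "yes", "41": "NA"},
    "checklist_3": {"6a": "NA", "19": "no", "41": "no"},
}
print(endorsement_rates(example))
# → {'19': 66.66666666666667, '41': 0.0, '6a': 100.0}
```

Note how excluding “NA” shrinks the denominator: item 6a reaches 100% from a single valid answer, which is one reason travel-related items can behave very differently between a local and an international tournament.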

Phase Two

Players’ self-administered TPF-SR checklists were analysed by computing mean endorsement rates (as above). Mean endorsement rates for self-report were then compared with those from the multimethod approach (observation/interviews) to determine the percentage agreement and the differences between the two measures.
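The comparison between measures can be sketched as below, again as a hypothetical illustration. It assumes that the “difference” is the absolute difference between the two endorsement rates in percentage points and uses the 25% threshold reported in the Results as a default; items without a computable rate in either measure are skipped. The function name and example rates are illustrative only.

```python
def compare_measures(rates_a, rates_b, threshold=25.0, tol=1e-9):
    """Compare two sets of per-item endorsement rates (percentages).

    Returns per-item absolute differences in percentage points and a summary
    of how many items fall below the threshold, agree perfectly, or exceed it.
    Items missing from either set, or with a rate of None, are skipped.
    """
    diffs = {}
    for item in sorted(set(rates_a) & set(rates_b)):
        a, b = rates_a[item], rates_b[item]
        if a is None or b is None:
            continue
        diffs[item] = abs(a - b)
    summary = {
        "compared": len(diffs),
        "below_threshold": sum(d < threshold for d in diffs.values()),
        "perfect": sum(d <= tol for d in diffs.values()),
        # A difference exactly equal to the threshold falls in neither group.
        "above_threshold": [item for item, d in diffs.items() if d > threshold],
    }
    return diffs, summary


# Hypothetical rates for observation/interviews and self-report.
observed = {"3": 100.0, "6a": None, "19": 50.0, "41": 80.0}
self_report = {"3": 100.0, "6a": 90.0, "19": 90.0, "41": 40.0, "42b": 70.0}
diffs, summary = compare_measures(observed, self_report)
print(summary)
# → {'compared': 3, 'below_threshold': 1, 'perfect': 1, 'above_threshold': ['19', '41']}
```

Because the absolute value discards direction, examining over-reporting would require the signed difference (self-report minus observation/interviews) for each item.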

Results

Participant characteristics

The demographic and descriptive characteristics of players from Phase One and Phase Two are provided in Table 2.

The convergent validity of the TPF-SR instrument

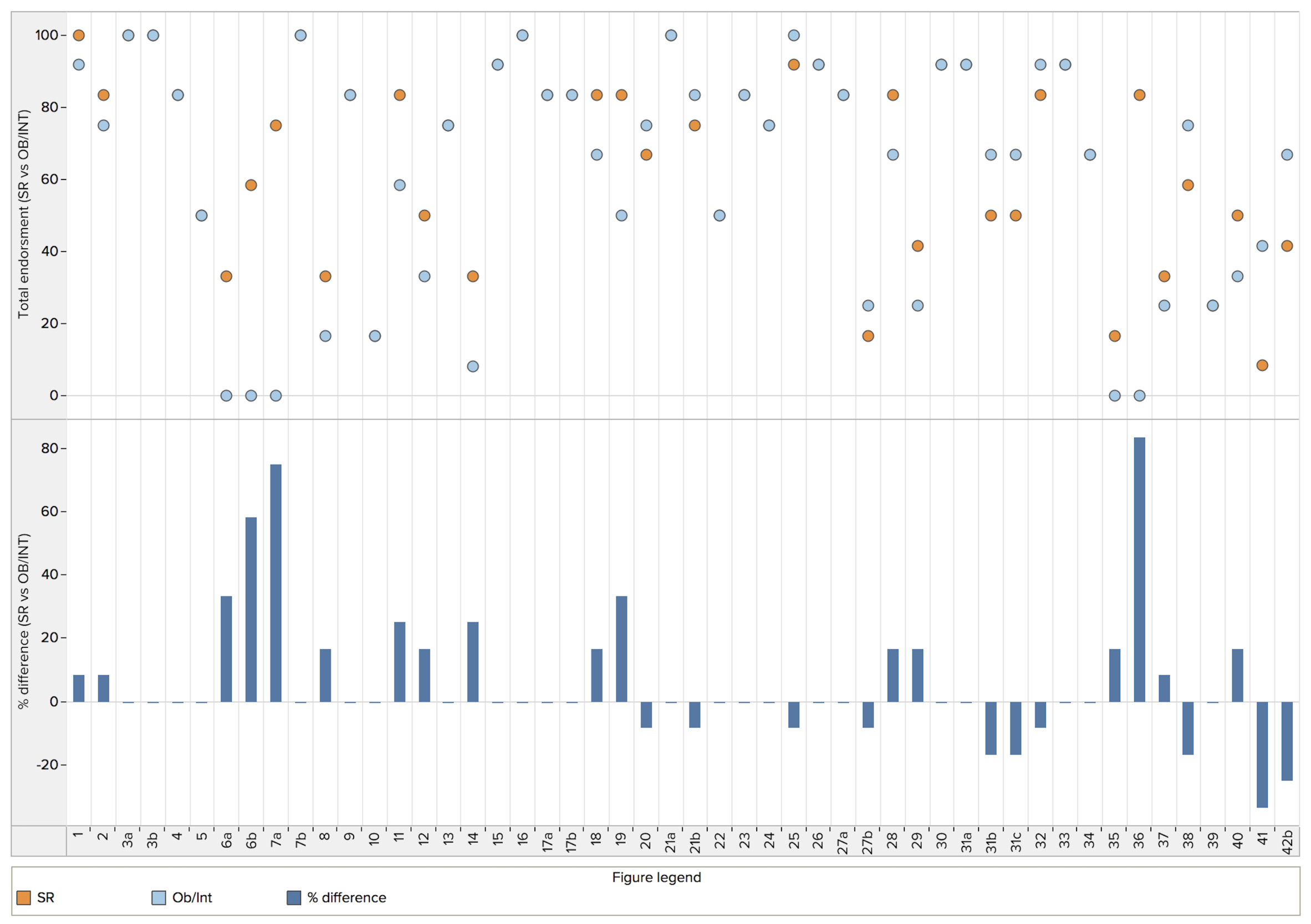

The convergent validity of the TPF-SR was evaluated by comparing the mean endorsement rates for items from the observation/interviews (Phase One) with those obtained from the self-report administration (Phase Two). The mean endorsement rates and percentage differences for both measures are shown in Figure 2. There was good agreement between self-report and observation/interviews, with differences in mean endorsement of <25% for 44/50 items and perfect agreement for 23/50 items. Despite this agreement for most items, differences in mean endorsement exceeded 25% for six items: (1) item 6a (Did you use any strategies for in-flight nutrition or hydration?); (2) item 6b (Did you use any strategies to manage or reduce jet lag?); (3) item 7a (Did you use any post-flight recovery strategies?); (4) item 36 (How many days before the first round did you arrive?); (5) item 19 (How many practice rounds did you complete for the tournament?); and (6) item 41 (Did you contact a sports psychologist for a debrief after any of your rounds?). Overall, mean endorsement rates were generally higher for self-report than for the observation/interviews.

Figure 3 shows the mean endorsement rate of each item relative to all other items. Overall, mean endorsement rates were relatively high, with ≥50% endorsement for 38/50 items from the TPF-SR.

Discussion

This study found initial evidence of the convergent validity of the newly developed TPF-SR instrument for golf. Direct comparisons of mean item endorsement rates from the observation/interviews and from self-report showed good agreement, supporting the instrument’s convergent validity. However, there was some evidence that players over-reported endorsement on the self-report relative to the observation/interviews. The proportion of players who endorsed items from the TPF-SR during a tournament was also high for most items.

The good agreement between self-report and observation/interviews provides tentative support for self-report as a valid measure of athletes’ behaviours over extended periods. Despite this evidence of convergent validity, agreement in endorsement rates between the two measures was lower for some items, mainly those that received many “NA” responses in the observation/interviews. In particular, there was poor agreement, together with a considerable number of “NA” responses in the observation/interviews, for item 6a (Did you use any strategies for in-flight nutrition or hydration?), item 6b (Did you use any strategies to manage or reduce jet lag?), item 7a (Did you use any post-flight recovery strategies?), and item 36 (How many days before the first round did you arrive?). These items relate to travel, and because the tournament used for the observation/interviews (i.e., the Riversdale Cup) was local for all participants, they were less relevant in that context, as reflected by the high number of “NA” responses. In contrast, the tournaments used for self-report were mostly international; thus, travel-related items received higher endorsement.

Aside from the travel-related items, two other items (i.e., 19 and 41) showed weak agreement between the two measures. For item 19 (How many practice rounds did you complete for the tournament?), the tournaments players used for self-report were mostly international, whereas many of the players in the observation/interviews lived nearby and had played the course before. Consequently, when self-administering the TPF-SR, players were less familiar with the performance environment, and completing multiple practice rounds was therefore likely a higher priority. For item 41 (Did you contact a sports psychologist for a debrief after any of your rounds?), endorsement rates were higher for the observation/interviews than for self-report. During collection of the observation/interview data (i.e., Phase One), a sport psychologist was on-site and working with players for several days of the competition. This may have contributed to the higher endorsement rate for this item, as amateur players typically lack the financial resources to employ a dedicated sport psychologist (Pilgrim et al., 2018b).

Although these contextual factors could explain some of the discrepancy, players still tended to report higher endorsement on the self-report than in the observation/interviews. Like most self-report measures, the TPF-SR items were relatively transparent and therefore susceptible to response distortion. That is, players could predict the favourable or “socially desirable” response and “fake good” to present a positive image of themselves (S. C. Smith et al., 2005). Acquiescent responding (i.e., participants’ tendency to agree with items regardless of their content) could also have influenced the results (Vaerenbergh & Thomas, 2012). Over-reporting can introduce systematic error by inflating aggregate item endorsements and is recognised as a threat to validity (Vaerenbergh & Thomas, 2012). Several methods to mitigate response bias are available, such as (a) using social desirability scales to detect, minimise, and correct for bias; and (b) delivering clear instructions that emphasise the importance of honest responses (van de Mortel, 2008). At a minimum, researchers and practitioners using the TPF-SR instrument should be aware of the possibility of response distortion.

In addition to the good agreement between self-report and observation/interviews, high endorsement rates were recorded for most items. Irrespective of whether an instrument is reliable, if coaches, players, and administrators do not see its value from the outset, it is likely to receive limited uptake (Robertson, 2018). The finding that elite players performed most items from the TPF-SR could demonstrate the instrument’s value to relevant stakeholders and positively influence their attitudes towards it. According to theories of volitional behaviour (i.e., the Theory of Reasoned Action and the Theory of Planned Behaviour), an important determinant of whether a behaviour is performed is one’s attitudes or beliefs about the outcomes of performing it (Montaño & Kasprzyk, 2008). Thus, an individual who believes that positively valued outcomes will result from performing specific behaviours will hold positive attitudes towards those behaviours. If players or coaches believe that items from the TPF-SR are important for golf performance (i.e., associated with positive outcomes), they are likely to place more value on the instrument and to integrate these behaviours into current practice. A self-report version of the TPF may have practical value for several reasons, including (a) athlete education (increased awareness of factors outside training that may influence performance); (b) improved self-management of behaviour (i.e., accountability); (c) promotion of self-regulatory behaviours (encouraging athletes to reflect upon performance and providing detailed information to support this process); and (d) collection of normative data for athlete benchmarking (Saw et al., 2015).

A limitation of this study was that the observation/interview data were not collected concurrently with the self-report data; that is, none of the players self-administered the TPF-SR for the same tournament used for the observation/interviews. This disconnect may have reduced the accuracy of direct comparisons between the two measures, as differences in agreement may reflect differences between the tournaments (e.g., travel demands, familiarity with the course) rather than the endorsement associated with specific items. Future studies should compare the two measures directly, for the same tournaments, over longitudinal periods. Further work to assess the measurement properties of the TPF-SR is also warranted. For instance, if players of higher ability levels were found to perform more items, or specific items, from the TPF-SR than their lower-level counterparts, this would support the instrument’s discriminative validity. Combined with this study’s findings, such information could provide a more complete picture of the construct validity of the TPF-SR instrument. To improve the validity of information about sports performers’ preparatory behaviours for research and practice, it may also be worth investigating the application and feasibility of emerging technologies such as wearable sensors or real-time behavioural monitoring.

Conclusions

The TPF-SR instrument displays initial evidence of convergent validity and can be self-administered by players to record pre- and within-tournament behaviours. The instrument encompasses a variety of task-specific and self-regulatory items and could be used to improve players’ awareness of preparatory behaviours and to promote adaptive self-regulatory behaviours (e.g., reflecting upon performance, self-monitoring). Further, it provides an instrument with which future studies can examine the tournament preparation behaviours of players of different ability levels. These findings also offer conceptual guidance for research investigating similar constructs or developing self-report versions of established frameworks. Evaluating other measurement properties, such as discriminant validity by examining the TPF-SR instrument’s ability to discriminate between players of different ability levels, is an obvious next step for future work in this area.
